NetEase Cloud Music went down at around 14:44 this afternoon and recovered at 17:11. The rumored cause is an infrastructure problem related to cloud disk storage.

Incident Timeline

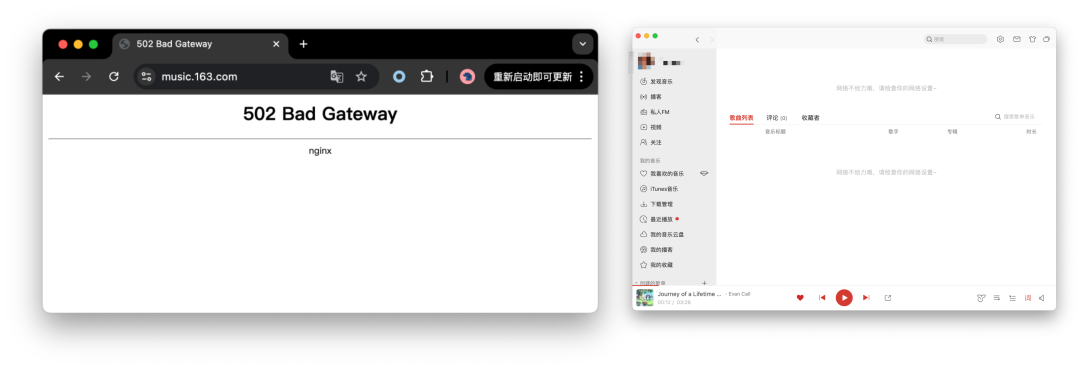

During the outage, NetEase Cloud Music clients could still play music that had been downloaded for offline use, but any attempt to access online resources returned an error. The web version returned 502 server errors and was completely inaccessible.

During this period, NetEase’s 163 portal also experienced 502 server errors and later redirected to the mobile version. Some users also reported that NetEase News and other services were affected.

Many users who couldn’t connect to NetEase Cloud Music assumed their own internet was down. Some uninstalled and reinstalled the app, while others assumed their company IT had blocked music streaming sites. The flood of comments quickly pushed the outage onto Weibo’s trending topics.

The outage lasted until 17:11, when NetEase Cloud Music recovered and the 163 main portal switched back from the mobile version to the desktop version. The total duration was approximately two and a half hours, which makes this a P0 incident.
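The "two and a half hours" figure can be checked from the two timestamps. The sketch below is only an illustration: the helper names are hypothetical, and the 30-day window used for the availability figure is my own assumption, not a number from NetEase or from any SLA.

```python
from datetime import datetime, timedelta


def outage_duration(start: str, end: str) -> timedelta:
    """Elapsed time between two same-day HH:MM timestamps."""
    fmt = "%H:%M"
    return datetime.strptime(end, fmt) - datetime.strptime(start, fmt)


def monthly_availability(downtime: timedelta, days_in_month: int = 30) -> float:
    """Fraction of the month the service was up (assumed window: 30 days)."""
    return 1 - downtime / timedelta(days=days_in_month)


# Timestamps reported for this incident: down at 14:44, recovered at 17:11.
duration = outage_duration("14:44", "17:11")
minutes = int(duration.total_seconds() // 60)

print(f"outage: {minutes} minutes")
print(f"availability over an assumed 30-day month: {monthly_availability(duration):.4%}")
```

The duration works out to 147 minutes, so "approximately two and a half hours" is a fair rounding.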

At 17:16, NetEase Cloud Music’s Zhihu account posted an apology, stating that users who search for “enjoy music” tomorrow can claim a 7-day Black Vinyl VIP membership as compensation.

Root Cause Analysis

Rumors and hearsay spread quickly during the outage. Claims that headquarters was on fire 🔥 (old photos), that TiDB had crashed (netizen speculation), that downloads of Black Myth: Wukong had overloaded the network, and that a programmer had deleted the database and fled were all obviously fake news.

However, two sources are worth consulting: an article previously published by NetEase Cloud Music’s official account, “Cloud Music Guizhou Data Center Migration Overall Plan Review”, and two detailed leaked chat records.

The rumored cause relates to cloud storage issues. While I won’t post the leaked chat records, you can refer to screenshots in articles like “NetEase Cloud Music Down, Cause Exposed! Data Center Migration Completed in July, Rumored Related to Cost Reduction” or authoritative media coverage “Exclusive | NetEase Cloud Music Outage Truth: Technical Cost Reduction, Insufficient Staff Took Half a Day to Troubleshoot”.

Some information about NetEase’s cloud storage team is public. For example, Curve, NetEase’s self-developed cloud storage project, was terminated.

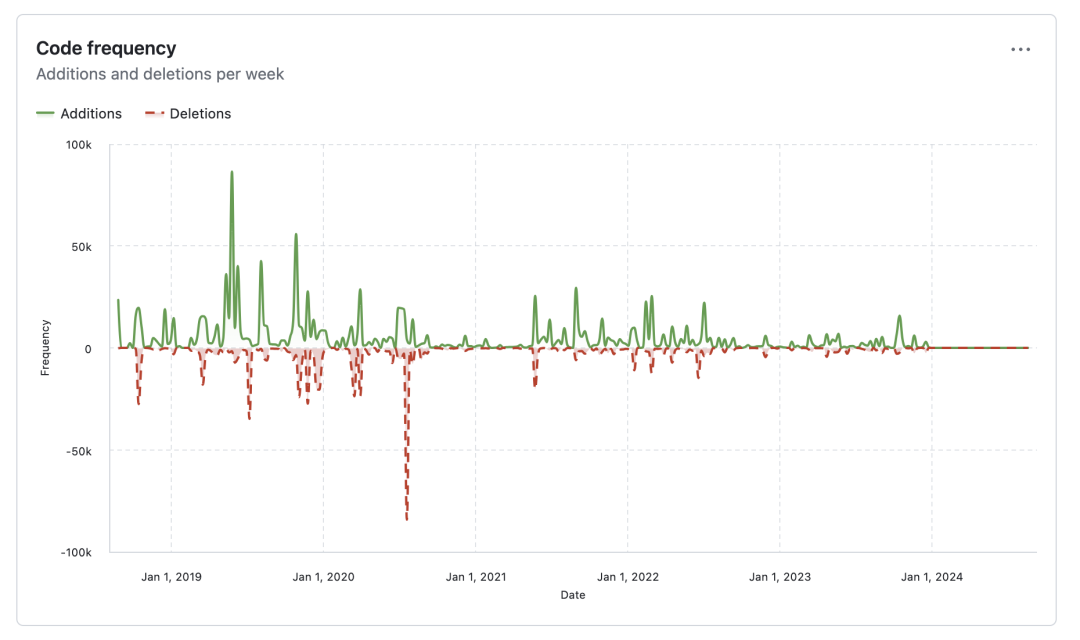

The Curve project’s GitHub homepage shows that the project has been stagnant since early 2024. The last release is still a release candidate with no official version, and the project has essentially gone unmaintained and silent.

The Curve team leader also published an article, “Curve: Regretful Farewell, Unfinished Journey”, on their official account, which was subsequently deleted. I remember this because Curve was one of the two open-source shared storage solutions recommended by PolarDB, so I had looked into the project specifically. Now it seems…

Lessons Learned

We’ve discussed layoffs and cost reduction many times before. What additional lessons can we learn from this incident? Here are my thoughts:

The first lesson: don’t run serious databases on cloud disks! On this matter, I can indeed say “Told you so”. Underlying block storage is primarily used for databases. If it fails, the blast radius and debugging difficulty far exceed the intellectual bandwidth of typical engineers. An outage this long (two and a half hours) clearly was not a stateless service problem.

The second lesson: self-developed solutions are fine, but keep people around to maintain them. Cost reduction eliminated the entire storage team, leaving no one to help when problems arose.

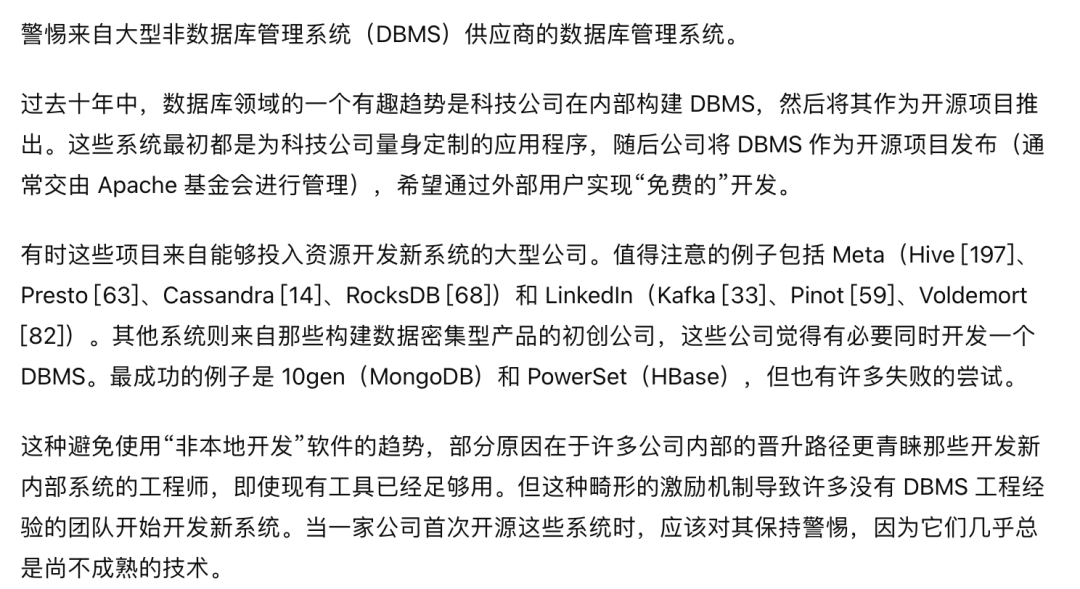

The third lesson: beware of big company open source. Underlying storage, once deployed, is not something you can simply replace. When NetEase killed the Curve project, all infrastructure built on Curve became unmaintained ruins. Stonebraker mentioned this in his famous paper “What Goes Around Comes Around”.
